Feb 26, 2025

AI voice agents are transforming global communication by breaking down language barriers. Here's why multilingual AI matters and how it works:

Why It Matters: 74% of consumers prefer after-sales support in their language, and 76% are more likely to buy when information is in their native language. Businesses risk losing billions without multilingual capabilities.

Key Challenges: Handling accents, dialects, and cultural nuances while maintaining response accuracy.

How It Works: AI systems use technologies like Speech Recognition, NLP, and Neural Machine Translation for real-time language detection, translation, and seamless conversation flow.

Benefits for Businesses: Improved customer satisfaction, reduced costs, and expanded global reach. For instance, Brilo AI increased CSAT by 15% and achieved 70% first-call resolution in just three months.

Multilingual AI voice agents are essential for businesses aiming to thrive in global markets.

Language Processing Methods in AI Voice Systems

Modern AI voice systems rely on advanced language processing to handle multilingual conversations seamlessly. With the global NLP market projected to hit $43.3 billion by 2025, these technologies are becoming central to business communications.

Natural Language Processing Basics

Natural Language Processing (NLP) is at the heart of multilingual voice agents, helping them interpret input and generate responses. These systems combine several key components:

For instance, IBM's watsonx Assistant uses these technologies to cut average handle times by 20%, offering businesses noticeable cost reductions.

"NLP is a branch of artificial intelligence that enables voice bots to understand, interpret, and respond to human language in a natural and meaningful way." - SquadStack

Translation and Speech Recognition Tools

Translation and speech recognition tools have progressed from basic text conversion to delivering highly accurate, near-instant results. Current systems can achieve around 85% accuracy within five seconds of receiving input. Leading tech companies have significantly advanced this field:

Microsoft Translator: Supports over 100 languages

Apple's Translate: Launched with 11 languages

Google's Pixel Buds: Offers real-time translation across dozens of languages

Amazon's Live Translate: Works with English, Spanish, German, French, Hindi, Italian, and Brazilian Portuguese

These advancements are powered by Neural Machine Translation (NMT) and the Transformer model introduced in 2017, which have greatly improved translation accuracy.

A real-world example is Vapi.ai, a Deepgram partner, which enables businesses to deploy multilingual agents without hiring language-specific staff. Their approach efficiently scales language support using modern NLP tools.

To maximize performance across languages, these systems focus on:

Training with diverse, accent-inclusive datasets

Using phonetic analysis for accurate speech recognition

Applying advanced contextual understanding to resolve ambiguities

This strong language processing framework supports dynamic language switching, making conversational AI more effective and versatile.

How AI Agents Switch Between Languages

Language Detection Systems

Today's AI voice agents rely on advanced systems that combine Automatic Speech Recognition (ASR) and Natural Language Processing (NLP) to detect languages in real time. These technologies work together to identify the speaker's language and craft appropriate responses.

Here’s how the process works:

ASR converts spoken words into text.

Intent recognition identifies the purpose behind the conversation.

Entity extraction pulls out key details, like names or dates.

Context management ensures the conversation flows naturally without losing important information.
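The four steps above can be pictured as a small pipeline. The sketch below is illustrative only: the keyword table, the date pattern, and every function name are assumptions made for this example, and `transcribe` is a stand-in that treats its input as text rather than calling a real ASR engine.

```python
import re
from dataclasses import dataclass, field

# Hypothetical keyword lists; a production agent would use a trained intent model.
INTENT_KEYWORDS = {
    "book_appointment": ("book", "schedule", "appointment"),
    "cancel_order": ("cancel",),
}

# Assumed entity format for this sketch: ISO dates such as 2025-03-14.
DATE_PATTERN = re.compile(r"\b\d{4}-\d{2}-\d{2}\b")


@dataclass
class ConversationContext:
    """Keeps details from earlier turns so later turns can reuse them."""
    intents: list = field(default_factory=list)
    entities: dict = field(default_factory=dict)


def transcribe(audio: bytes) -> str:
    """Stand-in for ASR: this sketch simply decodes the bytes as UTF-8 text."""
    return audio.decode("utf-8")


def recognize_intent(text: str) -> str:
    """Return the first intent whose keywords appear in the text."""
    lowered = text.lower()
    for intent, keywords in INTENT_KEYWORDS.items():
        if any(word in lowered for word in keywords):
            return intent
    return "unknown"


def extract_entities(text: str) -> dict:
    """Pull out the first ISO date, if any."""
    dates = DATE_PATTERN.findall(text)
    return {"date": dates[0]} if dates else {}


def handle_turn(audio: bytes, context: ConversationContext) -> ConversationContext:
    """Run one turn through ASR, intent recognition, entity extraction, and context update."""
    text = transcribe(audio)
    context.intents.append(recognize_intent(text))
    context.entities.update(extract_entities(text))
    return context
```

Passing the same `ConversationContext` into successive `handle_turn` calls is what lets a date mentioned in a later turn attach to a booking request made earlier.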

One impressive development in this area is VALL-E X, a cross-lingual neural codec model. It can generate high-quality speech in another language using just a single speech sample.

Managing Multi-Language Conversations

Once an AI agent detects the language, it must also handle multilingual conversations smoothly. This is especially important in settings like customer service, where clear communication is critical. For instance, AI Accent Localization was expected to help over 100,000 call center workers in 2024 by addressing challenges like accent variations, which can reduce customer frustration and shorten call times.

Key elements for managing multilingual conversations include:

Real-time Language Processing: AI platforms quickly identify and address communication challenges, adjusting responses instantly.

Context Preservation: Advanced systems track shifts in the conversation, ensuring important details don’t get lost.
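As a rough illustration of these two elements, the sketch below re-detects the language on every turn and keeps collected details when the caller switches. The stopword lists, the `Conversation` class, and its field names are all assumptions for this example; real systems use trained language-identification models rather than word overlap.

```python
from dataclasses import dataclass, field
from typing import Optional

# Tiny illustrative stopword sets, not a real language-ID model.
STOPWORDS = {
    "en": {"the", "is", "my", "and", "please", "i"},
    "es": {"el", "la", "es", "mi", "y", "por", "favor"},
}


def detect_language(text: str, fallback: str) -> str:
    """Pick the language whose stopwords overlap the text most.

    Splits on whitespace only, so punctuation attached to a word
    prevents a match. Returns `fallback` when nothing overlaps.
    """
    words = set(text.lower().split())
    scores = {lang: len(words & stops) for lang, stops in STOPWORDS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else fallback


@dataclass
class Conversation:
    language: str = "en"
    details: dict = field(default_factory=dict)    # survives language switches
    switches: list = field(default_factory=list)   # (turn index, from, to)
    turns: int = 0

    def add_turn(self, text: str, new_details: Optional[dict] = None) -> str:
        """Record one caller turn and return the language to respond in."""
        detected = detect_language(text, fallback=self.language)
        if detected != self.language:
            self.switches.append((self.turns, self.language, detected))
            self.language = detected
        self.details.update(new_details or {})
        self.turns += 1
        return self.language
```

Falling back to the current language when detection is inconclusive (for example, when the caller only reads out a number) avoids flipping languages on low-evidence turns.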

"You're not replacing like for like on telephony. What you're buying is the opportunity to extend your business processes. You're buying yourself access to data that you don't currently have" - Sam Wilson, CTO

Technologies like zero-shot cross-lingual synthesis allow instant translation and voice generation without the need for prior training on specific language pairs. Other tools, such as Language ID control, handle accents effectively, while real-time agent assistance ensures compliance and identifies new opportunities during conversations.

Setting Up Language-Specific Voice Agents

Configuring voice agents to handle the nuances of different languages is key to improving customer interactions. This process relies heavily on advanced language processing techniques.

Language Data Training Methods

Training AI voice agents starts with creating a diverse and high-quality audio dataset that includes a range of accents and dialects. Doing this can improve customer satisfaction by 68% and speed up issue resolution by 31%.

The success of language training hinges on the quality and variety of data. For instance, the Common Voice dataset contains 7,327 validated hours of recordings in 60 languages, complete with metadata like age, gender, and accent. This level of detail allows for more precise language processing.

Key considerations include:

Data Quality Control: Use preprocessing steps to clean audio data, ensuring consistent quality across all language variations.

Accent Variation: Incorporate regional accents for better recognition accuracy.

Cultural Context: Add regional expressions and cultural nuances to make interactions feel more natural.
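One way to act on the first two considerations is to clean the clip metadata and then check how evenly accents are represented. The sketch below assumes each clip is a dictionary with hypothetical `accent` and `duration_s` fields; the thresholds are placeholders, not recommended values.

```python
from collections import Counter


def clean_clips(clips, min_duration_s=1.0):
    """Drop clips with no accent label or with audio shorter than min_duration_s."""
    return [
        clip for clip in clips
        if clip.get("accent") and clip.get("duration_s", 0.0) >= min_duration_s
    ]


def accent_coverage(clips, min_share=0.10):
    """Return each accent's share of clips and the accents below min_share."""
    counts = Counter(clip["accent"] for clip in clips)
    total = sum(counts.values())
    if total == 0:
        return {}, []
    shares = {accent: n / total for accent, n in counts.items()}
    underrepresented = sorted(a for a, share in shares.items() if share < min_share)
    return shares, underrepresented
```

Accents that land in the under-represented list are candidates for additional data collection before training.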

Regional Communication Standards

Customizing AI voice agents for regional use involves more than just translation. It requires meeting local regulations and aligning with cultural preferences. For example, companies operating globally must comply with rules like GDPR in Europe and CCPA in California.

A structured approach to meeting these regional requirements includes:

"There are still issues, especially with data privacy and ensuring interactions are human-like. In addition to resolving problems with bias in training data and regulatory compliance, businesses must strike a balance between automation and personalization. For example, adhering to GDPR regarding the storage of voice data can be challenging, but doing so is essential to fostering trust." – Arvind Rongala, Founder of a skill-management solution provider

To maintain effective multilingual support, companies should regularly perform sentiment analysis for each language channel. This practice has delivered results, such as Webex achieving 92% context retention accuracy across 18 languages.
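A per-language sentiment review can be as simple as grouping scores by language and flagging channels whose average falls below a threshold. The sketch below assumes sentiment scores in the range -1 to 1 have already been produced by some upstream model; the record fields and the threshold are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def sentiment_by_language(interactions):
    """Average the sentiment score of interactions within each language."""
    grouped = defaultdict(list)
    for item in interactions:
        grouped[item["language"]].append(item["sentiment"])
    return {lang: mean(scores) for lang, scores in grouped.items()}


def channels_needing_review(interactions, threshold=0.0):
    """List languages whose average sentiment is below the threshold."""
    averages = sentiment_by_language(interactions)
    return sorted(lang for lang, avg in averages.items() if avg < threshold)
```

Running this on a regular schedule makes it easier to notice when one language channel starts lagging behind the others.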

Features like geolocation-based language adaptation further enhance regional customization. These tools automatically adjust to local preferences and communication styles, helping businesses cut regional operation costs by 40–65%. This setup ensures voice agents remain responsive and aligned with the needs of diverse markets.
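Geolocation-based adaptation usually comes down to a lookup with sensible fallbacks. The sketch below is a minimal version under stated assumptions: the country-to-locale table, the default locale, and the `pick_locale` function are invented for illustration and do not describe any particular platform.

```python
from typing import Optional

# Hypothetical mapping from ISO country codes to the agent's supported locales.
COUNTRY_LOCALES = {
    "DE": "de-DE",
    "FR": "fr-FR",
    "JP": "ja-JP",
    "BR": "pt-BR",
    "US": "en-US",
}
DEFAULT_LOCALE = "en-US"


def pick_locale(country_code: Optional[str], preferred: Optional[str] = None) -> str:
    """Choose a locale: explicit caller preference first, then geolocation, then default."""
    supported = set(COUNTRY_LOCALES.values())
    if preferred in supported:
        return preferred
    if country_code:
        return COUNTRY_LOCALES.get(country_code.upper(), DEFAULT_LOCALE)
    return DEFAULT_LOCALE
```

Letting an explicit preference override the geolocated guess matters for travelers and multilingual regions, where location alone is a weak signal of the caller's language.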

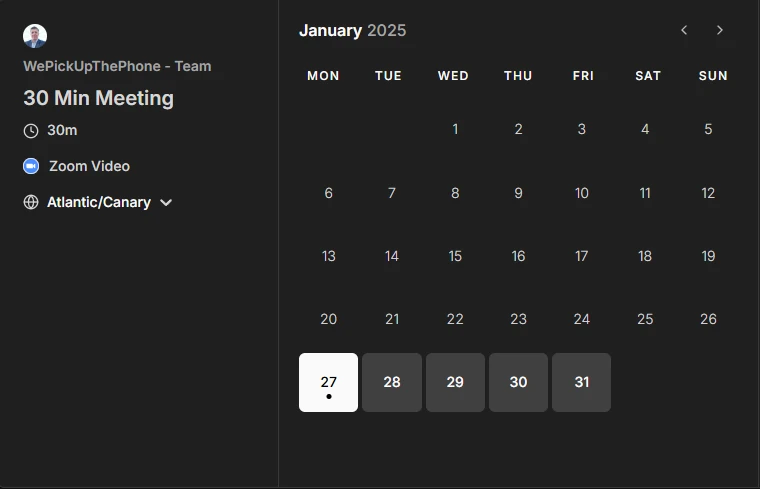

WePickUpThePhone Voice Agents: Multi-Language Business Support

Platform Capabilities

WePickUpThePhone's AI voice agents use advanced natural language processing (NLP) to provide multilingual support for businesses across various industries. By combining NLP with real-time translation, the platform simplifies complex language challenges, ensuring smooth and effective customer interactions. With over 200 voices in 32 languages and seamless integration into existing systems, it offers localized and authentic experiences tailored to diverse audiences.

Key Features:

These features make the platform suitable for a wide range of industries and applications.

Industry Use Cases

The platform has proven its value across different sectors. For instance, a waste collection company saw a 30% increase in revenue and a 50% rise in completed jobs after adopting multilingual voice agents.

"Our AI-Assisted Voice Agents are not just another technology upgrade - they represent a fundamental shift in how companies connect with their customers, offering unparalleled convenience and reliability." – Jon Billington, Director at OnRiseDigital LTD

Here’s how various industries benefit from these capabilities:

With automation workflows and real-time updates, the platform ensures consistent communication quality across all supported languages, helping businesses stay connected with their diverse audiences.

Conclusion: Impact of Multi-Language AI Voice Support

Key Takeaways

Multilingual AI voice agents are changing the game in global customer service. Consider this: 74% of consumers are more likely to buy again when support is offered in their language, and 76% are more likely to make a purchase when information is in their native language. The benefits of these solutions are clear and measurable across industries:

These results highlight the current success of AI voice solutions and pave the way for advancements in the field. The future of AI language support is set to deliver even more integrated and effective tools.

What’s Next for AI Language Support?

The evolution of multilingual AI voice support is accelerating, with exciting developments on the horizon. Olivia Moore, Partner, emphasizes the potential here:

"Voice is one of the most powerful unlocks for AI application companies. It is the most frequent (and most information-dense) form of human communication, made 'programmable' for the first time due to AI."

Emerging trends to watch include:

Multimodal Integration: AI systems are expanding beyond voice to include platforms like email, web chat, and text.

Improved Emotional Intelligence: AI agents are becoming more empathetic and better equipped to handle sensitive customer interactions.

Complex Scenario Handling: Enhancements in resolving edge cases are boosting overall system reliability.

Real-world success stories reinforce the impact of these advancements. Take Fujita Kanko, a Japanese hotel chain: after deploying a multilingual AI concierge system supporting Japanese, English, Chinese, and Korean, they reported a 97% customer satisfaction score. This underscores how breaking language barriers can transform customer service experiences.